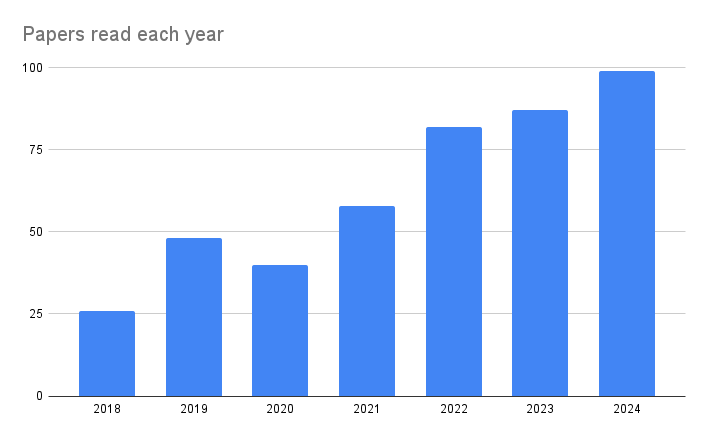

In 2024, I read 99 papers and 21 non-technical books. 99 papers is slightly more than my previous record (87 papers in 2023), while 21 books is slightly fewer…

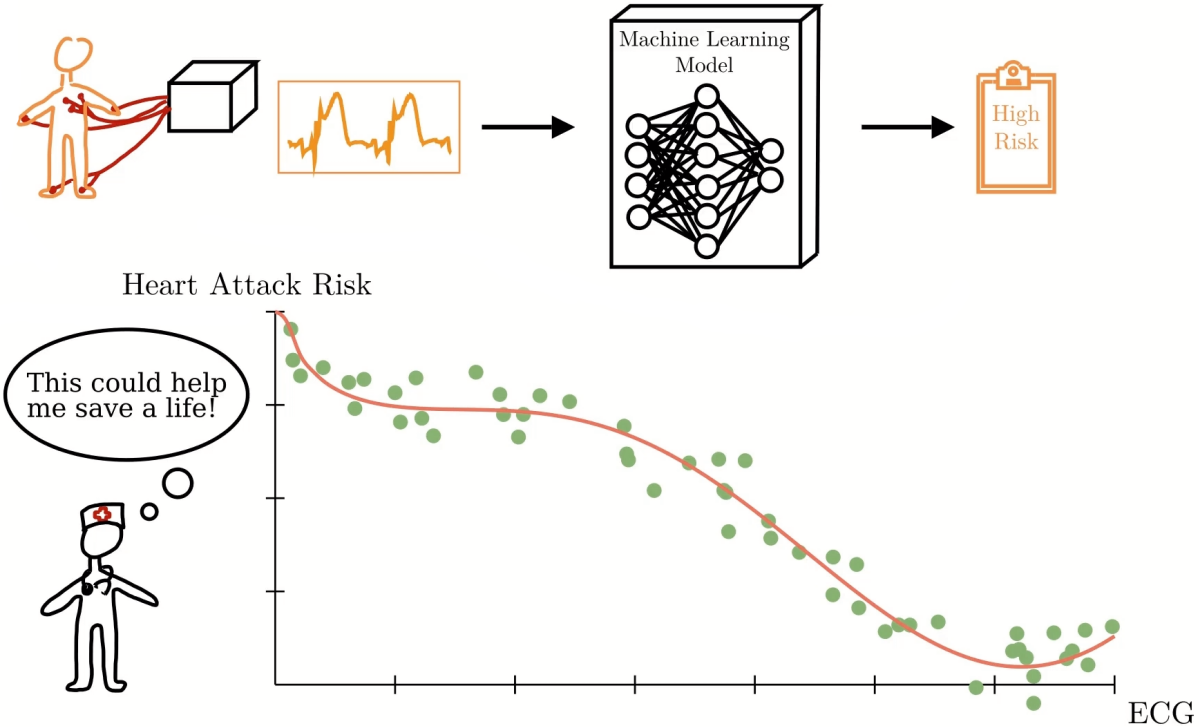

I am a postdoctoral researcher in the group of Mattias Rantalainen at Karolinska Institutet in Stockholm, working on machine learning and computer vision for computational pathology. My research focuses on how to build and evaluate reliable machine learning models, for applications within data-driven medicine and healthcare. It has often included regression problems, uncertainty estimation methods or energy-based models.

I received my BSc in Applied Physics and Electrical Engineering in 2016 and my MSc in Electrical Engineering in 2018, both from Linköping University. During my MSc, I also spent one year as an exchange student at Stanford University. I received my PhD in Machine Learning in 2023 from Uppsala University for the thesis Towards Accurate and Reliable Deep Regression Models. During my PhD, I was supervised by Thomas Schön and Martin Danelljan. I am originally from Trollhättan, Sweden.

[Jan 3, 2025] New blog post: My Year of Reading in 2024.

[Nov 18, 2024] New preprint: Multi-Stain Modelling of Histopathology Slides for Breast Cancer Prognosis Prediction [medRxiv] [project], work led by Abhinav Sharma.

[Oct 25, 2024] New preprint: Automated Segmentation of Synchrotron-Scanned Fossils [bioRxiv] [project], work led by Melanie During and Jordan Matelsky.

[Oct 10, 2024] New preprint: Evaluating Computational Pathology Foundation Models for Prostate Cancer Grading under Distribution Shifts [arXiv] [project].

[Oct 1, 2024] New preprint: Evaluating Deep Regression Models for WSI-Based Gene-Expression Prediction [arXiv] [project].

[Sep 16, 2024] New preprint: Taming Diffusion Models for Image Restoration: A Review [arXiv] [project], work led by Ziwei Luo.

[Jul 3, 2024] Accepted paper: Evaluating Regression and Probabilistic Methods for ECG-Based Electrolyte Prediction, work led together with Philipp Von Bachmann and Daniel Gedon, has been published in Scientific Reports.

[Apr 15, 2024] Accepted paper: Photo-Realistic Image Restoration in the Wild with Controlled Vision-Language Models has been accepted to CVPR Workshops 2024. This is follow-up work on our ICLR paper, once again led by Ziwei Luo.

Click here for older news.

1RT495 | Automatic Control II | MSc

Teaching Assistant (Swedish: Lektionsledare)

Spring II 2023

1RT700 | Statistical Machine Learning | MSc

Teaching Assistant

Autumn II 2022

1RT890 | Empirical Modelling | MSc

Teaching Assistant (Swedish: Lektionsledare)

Autumn I 2022

1RT700 | Statistical Machine Learning | MSc

Teaching Assistant

Spring I 2022

1RT890 | Empirical Modelling | MSc

Teaching Assistant (Swedish: Lektionsledare)

Autumn I 2021

1RT495 | Automatic Control II | MSc

Teaching Assistant

Spring II 2021

1RT490 | Automatic Control I | BSc

Teaching Assistant (Swedish: Lektionsledare)

Spring I 2021

1RT890 | Empirical Modelling | MSc

Teaching Assistant (Swedish: Lektionsledare)

Autumn I 2020

1RT700 | Statistical Machine Learning | MSc

Lab Assistant

Spring I 2020

1RT490 | Automatic Control I | BSc

Teaching Assistant (Swedish: Lektionsledare)

Spring I 2020

1RT490 | Automatic Control I | BSc

Teaching Assistant (Swedish: Lektionsledare)

Autumn I 2019

Deep Learning | PhD (Broad)

Teaching Assistant

Spring II 2019

1RT700 | Statistical Machine Learning | MSc

Lab Assistant

Spring I 2019

1RT490 | Automatic Control I | BSc

Teaching Assistant (Swedish: Lektionsledare)

Spring I 2019

TATA24 | Linear Algebra | BSc

Teaching Assistant (Swedish: Mentor)

Autumn 2015

TATM79 | Foundation Course in Mathematics | BSc

Teaching Assistant (Swedish: Handledare)

Autumn 2015

TATA24 | Linear Algebra | BSc

Teaching Assistant (Swedish: Mentor)

Autumn 2014

TAIU10 | Calculus, one variable - Preparatory course | BSc

Teaching Assistant (Swedish: Lektionsledare)

Autumn 2014

102 papers in total.

How Reliable is Your Regression Model’s Uncertainty Under Real-World Distribution Shifts?

RISE Learning Machines Seminars | Online | [slides] [video]

March 21, 2024

How Reliable is Your Regression Model’s Uncertainty Under Real-World Distribution Shifts?

DFKI Augmented Vision Workshop | Online | [slides]

October 31, 2023

Accurate 3D Object Detection using Energy-Based Models

Zenseact | Online | [slides]

January 29, 2021

Evaluating Scalable Bayesian Deep Learning Methods for Robust Computer Vision

Zenuity | Gothenburg, Sweden | [slides]

June 18, 2019

On the Use and Evaluation of Computational Pathology Foundation Models for WSI-Based Prediction Tasks

Scandinavian Seminar on Translational Pathology | Uppsala, Sweden | [slides]

November 23, 2024

Evaluating Computational Pathology Foundation Models for Prostate Cancer Grading under Distribution Shifts

Mayo-KI Annual Scientific Research Meeting | Stockholm, Sweden | [slides]

October 16, 2024

Towards Accurate and Reliable Deep Regression Models

PhD defense | Uppsala, Sweden | [slides] [video]

November 30, 2023

Some Advice for New (and Old?) PhD Students

SysCon μ seminar at our weekly division meeting | Uppsala, Sweden | [slides]

March 16, 2023

Can You Trust Your Regression Model’s Uncertainty Under Distribution Shifts?

SysCon μ seminar at our weekly division meeting | Uppsala, Sweden | [slides]

September 15, 2022

Energy-Based Probabilistic Regression in Computer Vision

Half-time seminar | Online | [slides]

February 3, 2022

Regression using Energy-Based Models and Noise Contrastive Estimation

SysCon μ seminar at our weekly division meeting | Online | [slides]

February 12, 2021

Semi-Flipped Classroom with Scalable-Learning and CATs

Pedagogical course project presentation | Uppsala, Sweden | [slides]

December 18, 2019

Deep Conditional Target Densities for Accurate Regression

SysCon μ seminar at our weekly division meeting | Uppsala, Sweden | [slides]

November 1, 2019

Predictive Uncertainty Estimation with Neural Networks

SysCon μ seminar at our weekly division meeting | Uppsala, Sweden | [slides]

March 22, 2019

Ziwei Luo | PhD student at Uppsala University

Co-supervisor, since Feb 2024

Main supervisor: Thomas Schön, other co-supervisor: Jens Sjölund

Erik Thiringer | MSc Thesis student at Karolinska Institutet

Co-supervisor, since Sep 2024

Main supervisor: Mattias Rantalainen

(Minimum 2 papers, updated 2024-11-18)

Main supervisor: Thomas Schön | PhD in 2006 from Linköping University

Advised by Fredrik Gustafsson (PhD in 1992 from Linköping University), advised by Lennart Ljung (PhD in 1974 from Lund University), advised by Karl Johan Åström (PhD in 1960 from KTH Royal Institute of Technology)

Co-supervisor: Martin Danelljan | PhD in 2018 from Linköping University

Advised by Michael Felsberg (PhD in 2002 from Kiel University) and Fahad Shahbaz Khan (PhD in 2011 from Autonomous University of Barcelona)

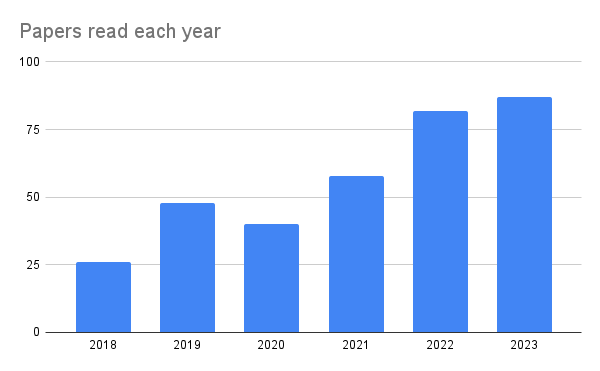

I categorize, annotate and write comments for all research papers I read, and share this publicly on GitHub (440+ papers since September 2018). Feel free to reach out with any questions or suggested reading. In June 2023, I also wrote the blog post The How and Why of Reading 300 Papers in 5 Years about this.

From 2018 to 2023, I organized the SysCon machine learning reading group.

I have also started to really enjoy reading various non-technical books. Since late 2022, I have read the following books (I’m also on Goodreads):

48 books in total.


In 2023, I read 87 papers and 26 non-technical books. 87 papers is slightly more than my previous record (82 papers in 2022), and I’ve never even been remotely close to reading 26 books in a year. Deciding to read more books is definitely…

Since I started my PhD almost five years ago, I have categorized, annotated and written short comments for all research papers I read in detail. I share this publicly in a GitHub repository, and recently reached 300 read papers. To mark this milestone, I decided to share some thoughts on why I think it’s important to read a lot of papers, and how I organize my reading. I also compiled some paper statistics, along with a list of 30 papers that I found particularly interesting…

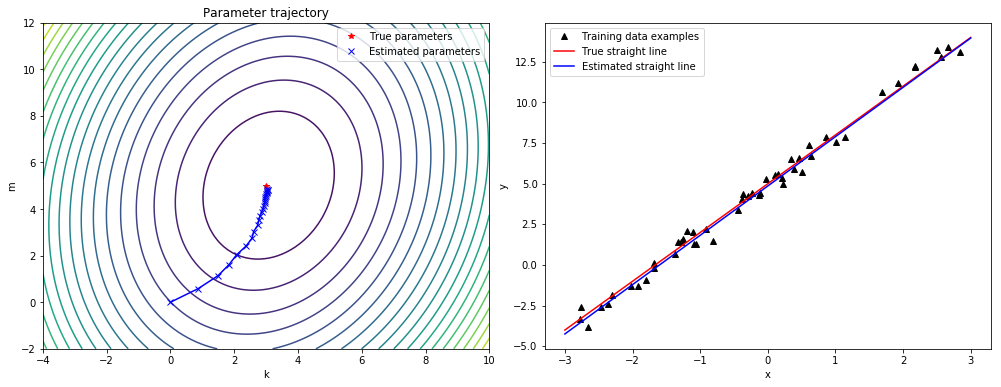

We have created a video in which we try to explain how machine learning works and how it can be used to help doctors. The explanation is tailored to grade 7-9 students, and the idea is that you should only need to know about basic linear functions (straight lines) to understand everything.

When I first got interested in deep learning a couple of years ago, I started out using TensorFlow. In early 2018 I then decided to switch to PyTorch, a decision that I’ve been very happy with ever since…

During the years of my PhD, running turned into an important part of my life, crucial for keeping me productive and in a good mental state throughout the work days and weeks. I’m a relatively serious runner, but I run mostly just because it’s a lot of fun and a great way to explore your surroundings, and because it’s good for both my physical and mental health. My training can be followed on Strava.

From Sep 10 2020 until Dec 31 2023, I was on a run streak (running at least 2 km outside every day) of 1208 days. I’m currently on a run streak of 300+ days, since Jan 27 2024.

81.5 credits in total.

29 units (58 credits) in total.

277 credits in total.